Stripe is a payments company that runs CTF competitions of the highest quality. This year, around today (22 Jan), they launched their third CTF, which is based on distributed computing (the top topic of the era!). The CTF is available at https://stripe-ctf.com

During their previous web hacking CTF, my uncle unfortunately passed away, and being responsible for the funeral and all left me very little time. I still finished it in a day (the writeup is available here) and won the Stripe T-shirt (sent to Iran, where I resided back then).

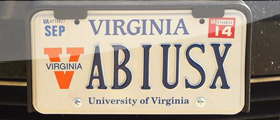

I started this one in the middle of Nicholas Christin’s presentation today. He was at UVa presenting some of his research on tracking illicit supply chains, which was absolutely lovely. It’s also worth mentioning that he was my CMU advisor. So far I’ve been in the top 20 on the leaderboard, but unfortunately I have to quit competing to get back to the rest of my life (research). I loved this CTF as much as their previous ones!

* * *

Level 0

There’s a piece of Ruby code that is apparently too slow; you need to understand it and make it perform better. The code is:

```ruby
#!/usr/bin/env ruby

# Our test cases will always use the same dictionary file (with SHA1
# 6b898d7c48630be05b72b3ae07c5be6617f90d8e). Running `test/harness`
# will automatically download this dictionary for you if you don't
# have it already.

path = ARGV.length > 0 ? ARGV[0] : '/usr/share/dict/words'
entries = File.read(path).split("\n")

contents = $stdin.read
output = contents.gsub(/[^ \n]+/) do |word|
  if entries.include?(word.downcase)
    word
  else
    "<#{word}>"
  end
end
print output
```

Essentially, it reads the dictionary file (available on UNIX systems, a list of English words, one per line) and stores it in an array. Then it reads text from STDIN, and for each word (word boundaries are space and newline, as defined in the gsub regex) it outputs the word intact if it’s found in the words (entries) array, and inside <> marks otherwise.

The bottleneck here is that for each word in the text, the whole dictionary array is scanned. The best approach would be to construct a dictionary (hashmap, trie or any other suitable data structure) from the entries and then search inside that, changing each lookup from O(n) in the dictionary size to O(1) on average.

The resulting code would be:

```ruby
path = ARGV.length > 0 ? ARGV[0] : '/usr/share/dict/words'
entries = File.read(path).split("\n")

t = Hash.new
entries.each { |entry| t[entry] = 1 }

contents = $stdin.read
output = contents.gsub(/[^ \n]+/) do |word|
  # if entries.include?(word.downcase)
  if t[word.downcase] == 1
    word
  else
    "<#{word}>"
  end
end
print output
```
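
The same idea can be sketched in Python with a set, which also gives constant-time membership tests on average. This is only an illustration: the function name `mark_unknown` and the tiny in-memory dictionary are made up here and are not part of the CTF code.

```python
import re

def mark_unknown(text, dictionary):
    """Wrap every word that is not in `dictionary` in <angle brackets>.

    Words are runs of characters other than space and newline, matching
    the regex used by the Ruby code. `dictionary` should be a set so that
    each membership test is O(1) on average.
    """
    def replace(match):
        word = match.group(0)
        return word if word.lower() in dictionary else "<" + word + ">"
    return re.sub(r"[^ \n]+", replace, text)

# Hypothetical three-word dictionary, standing in for /usr/share/dict/words.
words = {"hello", "big", "world"}
result = mark_unknown("Hello bgi world\nfoo", words)
print(result)
```

Passing a list instead of a set would still work, but would bring back the linear scan per word that made the original slow.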

There’s room for further optimization of this code. The furthest would be storing a serialized image of the hashmap and loading it on launch instead of rebuilding it from the word list, so that the startup cost shrinks to a single read.

SPOILER ALERT: Stripe CTF is a lot of fun, and there are very few CTFs of this quality. If you wanna enjoy it, stop reading and start trying to solve the questions on your own.

Level 1

This is a very interesting and somewhat hard question! Bitcoin is a money protocol, invented some years ago. When I first got to study Bitcoin, one Bitcoin was worth around $90; now it’s worth around $1000 and growing, which makes it obviously interesting. The basic idea behind Bitcoin is that if you transfer your money to someone else, the transaction is recorded in a ledger, and the record is sealed with a hash that is computationally hard to find. The ledger is shared by a lot of people, which prevents forgery, and the chain of changes to the ledger is stored as well.

Now the challenge is implementing a similar protocol, simplified in two aspects: first, the ledger is not distributed and is located on Stripe’s server, and second, the computationally complex part is actually not that complex (something around 17 million SHA1 hashes on average).

The challenge uses a Git repository as the ledger. Git stores everything in local objects: each file goes into a blob object, each directory structure into a tree object, and each commit’s metadata into a commit object. Each object is assigned a SHA1 hash, computed in a specific way. A commit’s hash is computed by hashing the following data record:

- Hash of its parent commit (hence chaining commits in history)

- Hash of the tree object where it resides (root repository folder)

- Commit Metadata (username, time, description, etc.)

Now you have to give yourself a coin by adding your username to the LEDGER.txt file followed by the number 1 (or incrementing the number if you are already listed), and committing the change. To make this computationally hard, the server rejects any commit whose commit object hash is not lexicographically smaller than 000001 (pad it with 34 zeroes if you wish).

Let’s figure out how hard this computation actually is. To do this, we need to know what fraction of 40-digit hexadecimal hashes are smaller than 00000100…, i.e. are of the form 000000xxxx…. Each hexadecimal digit is 4 bits, so six leading zero digits fix 24 bits, and a uniformly distributed hash passes with probability 1/2^24. The search space is therefore 2^24 hashes, roughly 10^(0.3×24) or about 17 million, which is also the expected number of attempts. For a 50% chance of success we need about 0.69 × 2^24, which is roughly 12 million different hashes.
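
As a quick sanity check of this estimate, the arithmetic can be run in Python. This is just a sketch under the assumption that SHA1 output is uniformly distributed, so each attempt passes independently with the same probability; the variable names are made up for illustration.

```python
import math

HEX_ZEROS = 6                 # a passing hash must start with "000000"
BITS = 4 * HEX_ZEROS          # each hex digit carries 4 bits
p = 1 / 2**BITS               # chance that one random hash passes

# Mean of a geometric distribution with success probability p is 1/p.
expected_tries = 2**BITS

# Smallest n with 1 - (1 - p)**n >= 0.5, i.e. a 50% chance of at least one hit.
tries_for_half = math.ceil(math.log(0.5) / math.log1p(-p))

print(expected_tries)
print(tries_for_half)
```

The second number comes out at roughly 0.69 times the first, which is the usual ln 2 factor for "even odds" in a repeated independent trial.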

Since SHA1 behaves like a cryptographically secure hash function for this purpose (there is no known shortcut for steering its output, only brute computational power), we can just change a number in our data and re-hash to get different, (hopefully) uniformly distributed hashes, and after roughly 17 million tries on average get a hash that is below the desired threshold.

Since we cannot change the parent hash or the tree hash, we have to change the description, simply by appending a number to it (sequential or random, more on this later).

The initial BASH code achieves this, but as stated is too slow to be useful:

```bash
#!/bin/bash

set -eu

if [ "$#" != 2 ]; then
    echo >&2 "Usage: $0 <clone_url> <public_username>

A VERY SLOW mining implementation. This should give you an idea of
where to start, but it probably won't successfully mine you any
Gitcoins.

Arguments:

<clone_url> is the string you'd pass to git clone (i.e.
  something of the form username@hostname:path)

<public_username> is the public username provided to you in
  the CTF web interface."
    exit 1
fi

export clone_spec=$1
export public_username=$2

prepare_index() {
    perl -i -pe 's/($ENV{public_username}: )(\d+)/$1 . ($2+1)/e' LEDGER.txt
    grep -q "$public_username" LEDGER.txt || echo "$public_username: 1" >> LEDGER.txt

    git add LEDGER.txt
}

solve() {
    # Brute force until you find something that's lexicographically
    # small than $difficulty.
    difficulty=$(cat difficulty.txt)

    # Create a Git tree object reflecting our current working
    # directory
    tree=$(git write-tree)
    parent=$(git rev-parse HEAD)
    timestamp=$(date +%s)

    counter=0

    while let counter=counter+1; do
        echo -n .

        body="tree $tree
parent $parent
author CTF user <[email protected]> $timestamp +0000
committer CTF user <[email protected]> $timestamp +0000

Give me a Gitcoin

$counter"

        # See http://git-scm.com/book/en/Git-Internals-Git-Objects for
        # details on Git objects.
        sha1=$(git hash-object -t commit --stdin <<< "$body")

        if [ "$sha1" "<" "$difficulty" ]; then
            echo
            echo "Mined a Gitcoin with commit: $sha1"
            git hash-object -t commit --stdin -w <<< "$body" > /dev/null
            git reset --hard "$sha1" > /dev/null
            break
        fi
    done
}

reset() {
    git fetch origin master >/dev/null 2>/dev/null
    git reset --hard origin/master >/dev/null
}

# Set up repo
local_path=./${clone_spec##*:}

if [ -d "$local_path" ]; then
    echo "Using existing repository at $local_path"
    cd "$local_path"
else
    echo "Cloning repository to $local_path"
    git clone "$clone_spec" "$local_path"
    cd "$local_path"
fi

while true; do
    prepare_index
    solve
    if git push origin master; then
        echo "Success :)"
        break
    else
        echo "Starting over :("
        reset
    fi
done
```

Before explaining the code, it is worth mentioning that Stripe runs a bot that mines a Gitcoin every 15 minutes (i.e. changes the ledger and pushes it to the repo), which changes your parent hash and forces you to start over. This means that your code should be fast enough not to be bothered by a 15-minute deadline.

This code is divided into 4 sections, 3 of which are functions. The main section at the bottom clones the repo into another folder (so as not to mess with your code and the repo it is running from), and then calls the three functions inside a loop.

The first function, prepare_index, adds your name to the ledger and stages the change. Those two fancy lines in it simply add a string to the file or bump your existing count (it’s sometimes very messy to do this with bash code). The last function, reset, fetches the online repo and hard-resets the local copy to it, picking up any changes (by the bot miner). The second function, solve, tries to find an appropriate hash by constructing different descriptions.

Now these three are in a loop because, by the time solve succeeds, you might have gotten past your 15 minutes; your hash may be useless and the push might fail, so it tries again. Don’t bother running this though, it’s slow enough not to let you find a single hash; we’ll get to why later.

What solve does is basically construct the metadata string in the body variable, composed of the tree hash, parent hash, timestamps and some description. A counter is appended to the description so that it results in a different hash on every iteration.

Since we don’t know how Git computes those hashes, we ask it to generate one for us. The following line:

```bash
git hash-object -t commit --stdin <<< "$body"
```

has three important components. First, it computes an object hash for us. Second, we tell it the object type with the -t switch (remember those three kinds of Git objects?). Third, without the -w switch it just computes the hash without writing the object to the repository. We give it our data record as input. If the resulting hash is smaller than 000001 (lexicographically speaking), we’re good, so we write the commit object with that description and try to push.

Remember that we need to repeat this process roughly 17 million times on average. This is not some tight computational code, though; it’s bash code, and almost every statement in the loop spawns a process! Spawning processes is a very heavy task, and hence extremely slow. What we need to do is reproduce this whole code (at least the part inside the loop in the solve function) in another programming language. The hard part is generating those Git hashes, because the git application was doing it for us before, and now we need to figure out how to do that ourselves.

The Git internals book has a section on this, http://git-scm.com/book/en/Git-Internals-Git-Objects, but it’s not enough. It explains how objects are stored and handled, and finally how one can create and hash a blob object using Ruby, but it doesn’t spell out how commit object hashes are made, which is what we need for this problem.

The ruby code for this would be as follows:

```ruby
require 'digest/sha1'

system('./prepare.sh "checkout-url" ledger-username') # prepare.sh is listed later
Dir.chdir Dir.pwd + "/temp" # change directory to temp

tree = `git write-tree`.delete("\n")       # no need to know the internals of this, it only runs once
parent = `git rev-parse HEAD`.delete("\n") # same here
timestamp = `date +%s`.delete("\n")
difficulty = "000001"
counter = 0

# Building this outside the loop makes the code run much faster,
# because this part never changes.
base = "tree #{tree}
parent #{parent}
author CTF user <[email protected]> #{timestamp} +0000
committer CTF user <[email protected]> #{timestamp} +0000

Give me a Gitcoin

"

while true do
  counter += 1
  content = base + counter.to_s + "\n"
  # Git hashes "<type> <size in bytes>\0" followed by the body.
  store = "commit #{content.bytesize}\0" + content # this part is important
  sha1 = Digest::SHA1.hexdigest(store)
  if sha1 < difficulty
    print sha1 + "\n"
    break
  elsif counter % 100000 == 0
    print counter.to_s + "\n" # progress feedback
    STDOUT.flush
  end
end

File.open("t.txt", 'w') { |f| f.write(content) } # put the commit body in file t.txt
sha2 = `git hash-object -t commit t.txt`.delete("\n") # make sure that git computes the same hash
if sha1 == sha2
  system("git hash-object -t commit -w t.txt") # write the commit object
  system("git reset --hard '#{sha1}' > /dev/null")
else
  print "Invalid!\n"
end
```

After running this code, the correct SHA1 hash is printed and the commit object is written. The code provides visual feedback every hundred thousand hashes, which takes a tenth of a second on my machine.

The major problem with writing this code is figuring out the line commented as “important”. There is no mention of how the string needs to be generated and then hashed, so we seek help from reverse engineering.

To figure this part out, add your name to the LEDGER.txt file, commit the changes using git commit -m "MSG" -a, and note the resulting hash (it only outputs the first few digits of that hash, because the odds of two hashes colliding are so small). For example, let’s assume that the output hash was abcdef01, and run the following command:

```bash
python3 -c "import zlib,sys;print(repr(zlib.decompress(sys.stdin.buffer.read())))" < .git/objects/ab/cdef01...
```

Pressing tab fills in the rest of the filename. We need this Python snippet to decompress the file, which is compressed using zlib as mentioned in the internals book (a command line zlib tool is hard to come by). The result should look like:

```
b'commit 198\x00tree 10fe092c5bbdd19a8550f6b1976babc659fe82f0\nparent 000000d9e8c29e90b3f7341da2a4b0a72043ea48\nauthor AbiusX <[email protected]> 1390441995 -0500\ncommitter AbiusX <[email protected]> 1390441995 -0500\n\nMSG\n'
```

As you can see, it’s almost like the blob format, but with the word commit in place of blob. You might be lucky and get it with trial and error, but I wasn’t.
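
To make the format concrete, here is a minimal Python sketch of the same computation: a type word, a space, the body size in bytes, a NUL byte, then the body, all fed to SHA1. The helper names `git_object_store` and `git_object_hash` and the sample commit body are made up for illustration; only `hashlib` and `zlib` from the standard library are used.

```python
import hashlib
import zlib

def git_object_store(obj_type, body):
    """Return the bytes Git hashes: '<type> <size>', a NUL byte, then the body."""
    header = f"{obj_type} {len(body)}".encode("ascii") + b"\x00"
    return header + body

def git_object_hash(obj_type, body):
    """SHA1 hex digest of a Git object with the given type and body (bytes)."""
    return hashlib.sha1(git_object_store(obj_type, body)).hexdigest()

# An empty blob is a convenient sanity check against `git hash-object`.
empty_blob = git_object_hash("blob", b"")
print(empty_blob)

# Hypothetical commit body (all-zero tree hash), only to show the header.
body = b"tree " + b"0" * 40 + b"\n\nMSG\n"
store = git_object_store("commit", body)
print(store[:12])

# Loose objects on disk are these same bytes, zlib-compressed.
on_disk = zlib.compress(store)
print(zlib.decompress(on_disk) == store)
```

Swapping `"blob"` for `"commit"` is the whole difference between the example in the Git book and what the miner needs.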

This is the contents of prepare.sh:

```bash
#!/bin/bash

set -eu

if [ "$#" != 2 ]; then
    echo >&2 "Usage: $0 <clone_url> <public_username>

A VERY SLOW mining implementation. This should give you an idea of
where to start, but it probably won't successfully mine you any
Gitcoins.

Arguments:

<clone_url> is the string you'd pass to git clone (i.e.
  something of the form username@hostname:path)

<public_username> is the public username provided to you in
  the CTF web interface."
    exit 1
fi

export clone_spec=$1
export public_username=$2

rm -rf temp
git clone $clone_spec temp
cd temp

perl -i -pe 's/($ENV{public_username}: )(\d+)/$1 . ($2+1)/e' LEDGER.txt
grep -q "$public_username" LEDGER.txt || echo "$public_username: 1" >> LEDGER.txt

git add LEDGER.txt
```

I have a fast connection, so I just clone the repository every time I want to run this script, instead of checking for changes. The whole hash generation process takes a few seconds on my system; then all I need to do is type git push in the terminal, and I’m good to go. In case the push is rejected, redo the whole process (the 15-minute bot just beat you to it). If your system is too slow, replace counter=0 with counter=rand(1000000000) in the Ruby code, and spawn 4 or more Ruby processes (as many cores as you have).
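
A variation on the random starting point is to give each process its own stride through the counters, so no two workers ever try the same value. The following Python sketch shows that idea; it is not the Ruby code above, and the function name `mine`, its parameters, the all-zero hashes in the commit body and the easy difficulty of `"01"` are all assumptions chosen to keep the demo fast.

```python
import hashlib

def mine(base, difficulty, worker=0, workers=1, max_tries=None):
    """Search for a counter whose commit hash sorts below `difficulty`.

    `base` is the constant part of the commit body (bytes). Worker number
    `worker` out of `workers` tries counters worker+1, worker+1+workers, ...
    so parallel processes never repeat each other's work.
    Returns (counter, sha1_hex), or None if max_tries runs out.
    """
    counter = worker + 1
    tries = 0
    while max_tries is None or tries < max_tries:
        content = base + str(counter).encode("ascii") + b"\n"
        header = b"commit " + str(len(content)).encode("ascii") + b"\x00"
        sha1 = hashlib.sha1(header + content).hexdigest()
        if sha1 < difficulty:
            return counter, sha1
        counter += workers
        tries += 1
    return None

# Hypothetical commit body; a real one carries the actual tree and parent hashes.
base = b"".join([
    b"tree ", b"0" * 40, b"\n",
    b"parent ", b"0" * 40, b"\n",
    b"\n",
    b"Give me a Gitcoin\n\n",
])

# Difficulty "01" means roughly 1 in 256 hashes pass, so this returns quickly.
found = mine(base, "01", worker=1, workers=4, max_tries=100_000)
print(found)
```

To use several cores, one would start `workers` separate processes, each calling `mine` with a different `worker` number, and push whichever result arrives first.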

Bonus round: After you win this, you’re granted access to a repo which is shared between all contestants, all mining off it. The fastest code prevails. If I wanted to win for sure, I’d use an OpenCL SHA1 implementation, like Cryptohaze. That thing is at least a thousand times faster (taking a tiny fraction of a second to solve this problem); but who cares, it’s not real money.

Level 2

This is a simplified DDoS scenario. Your role is to implement a proxy that detects bots and drops them, because everything that reaches the backend server consumes a lot of resources. Everything is in JavaScript, to give it a fragile touch too. Just uncomment the takeRequest method inside the shield file:

```javascript
Queue.prototype.takeRequest = function (reqData) {
  // Reject traffic as necessary:
  if (currently_blacklisted(ipFromRequest(reqData))) {
    return rejectRequest(reqData);
  }
  // Otherwise proxy it through:
  this.proxies[0].proxyRequest(reqData.request, reqData.response, reqData.buffer);
};
```

You essentially need to implement the function currently_blacklisted, but we’re gonna take two more steps to further optimize the code. You might even get past this challenge just by implementing currently_blacklisted as follows:

```javascript
function currently_blacklisted(ip) { return (Math.random() < .5); }
```

That’s because you drop 50% of the load, and the server is no longer overloaded that much! But let’s get serious and see some real candy (add the following code):

```javascript
var ip_pool = {};
var total_reqs = 0;
var unique_reqs = 0;
var active_requests = 0;

function currently_blacklisted(ip)
{
  total_reqs++;
  if (ip in ip_pool)
    ip_pool[ip]++;
  else
  {
    unique_reqs++;
    ip_pool[ip] = 1;
  }
  if (ip_pool[ip] < total_reqs / unique_reqs / 5)
    return false;
  return true;
}
```

What we do here is three things: if the IP is new, we add it to the list and count a unique IP; if it already exists, we increase its counter; and in both cases we count the total requests. If this IP has made fewer than a fifth of the average requests per IP, it’s a benign one; otherwise it is blacklisted. The factor of 5 was found by experimentation (though it was the first thing I tried).
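
To see how the rule behaves on concrete numbers, here is a small Python model of the same check. The class name `PerCapitaFilter` and the two IP addresses are invented for the example; the comparison mirrors the JavaScript above, flagging an IP unless its count is below a fifth of the per-IP average.

```python
class PerCapitaFilter:
    """Flag an IP unless its request count is below average-per-IP / factor."""

    def __init__(self, factor=5):
        self.factor = factor
        self.counts = {}   # ip -> number of requests seen from it
        self.total = 0     # all requests seen so far

    def currently_blacklisted(self, ip):
        self.total += 1
        self.counts[ip] = self.counts.get(ip, 0) + 1
        threshold = self.total / len(self.counts) / self.factor
        return self.counts[ip] >= threshold

f = PerCapitaFilter()
for _ in range(20):
    f.currently_blacklisted("10.0.0.1")       # noisy client warms up the counters
quiet = f.currently_blacklisted("10.0.0.2")   # 1 request vs threshold 21/2/5 = 2.1
noisy = f.currently_blacklisted("10.0.0.1")   # 21 requests vs threshold 22/2/5 = 2.2
print(quiet, noisy)
```

One consequence worth noticing is that the very first request ever seen is flagged (a count of 1 is not below 1/1/5), so the rule only starts letting quiet clients through once noisy ones have pushed the average up.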

Don’t get too excited now; no one will ever be able to stop a real DDoS attack using this approach. There are 4 billion IPv4 addresses on the Internet, and your computer cannot have enough memory to account for all of them; this is just a game.

Now let’s further enhance this code by changing the uncommented part of the existing code to the following:

```javascript
Queue.prototype.takeRequest = function (reqData) {
  // Reject traffic as necessary:
  if (currently_blacklisted(ipFromRequest(reqData))) {
    if (active_requests > 2)
      return rejectRequest(reqData);
  }
  // Otherwise proxy it through:
  active_requests++;
  this.proxies[Math.floor(Math.random() * 2)].proxyRequest(reqData.request, reqData.response, reqData.buffer);
};

Queue.prototype.requestFinished = function () {
  active_requests--;
  return;
};
```

Two things are new. First, we implemented load balancing (we have two backend servers) by just randomly passing each request to one of the two. Second, we keep track of the number of active requests (those being processed by the backend), so we know how busy the backend is; if there are two or fewer active requests, we pass requests along to the backend even if they look like DDoS requests, just to prevent idleness!

Overall, these few changes should give one a score of 150+.

Level 3

Let me warn you before we get to this level: if you’ve never done functional programming, or Scala programming, or both, and if you’re not familiar with ways to write functional code that parses strings, you’re doomed and stuck at this level for good. I’m not gonna give you a copy-paste solution, because I don’t have one.

This level has a Scala application and a directory structure spanning multiple text files. The Scala code lists each file in the directory structure in an Index object, and when searching for a word, uses this list to read each and every file and scan every single line of it for the text. Now I have a super-fast SSD drive, and without any changes I got a score of nearly 100 (identical to the benchmark code), but to pass this level you need to get at least 400.

Before getting there, know that this project uses SBT, a Scala build tool, which is very ugly, stupid, slow and stupid. I’ve used it forever, and hated it forever. Before running the harness code on this, you should try to run a single server instance, just to have SBT install all dependencies and compile everything. Whenever you change a single line of code, you should do this again to compile and update the structure, and then run the tests. I personally think that Scala has all the badness of Java, plus all the worst of functional programming languages, in one place. With all those nice and fast systems out there, why would someone want Scala!? (I did a bunch of trainings on it a few years ago.)

You need to deal with two files out of all the files in the project, namely index.scala and searcher.scala. The Index file is in charge of listing the files. You should change it to store more information (preferably a shared inverted index, because there’s not enough memory to have an inverted index per file). The Searcher class searches for a word by checking whether it’s inside a file’s whole content, and if it is, breaks the file into an array of lines and checks each one to find the matching line. This should be changed to just perform a lookup on the inverted index hash map and return the filename and line number.
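
The data structure itself is small. As a rough sketch of a shared inverted index (in Python rather than Scala, with made-up names `build_index` and `find` and a hypothetical two-file corpus), each word maps to the list of places where it occurs:

```python
from collections import defaultdict

def build_index(files):
    """Map each word to the list of (path, line_number) places it occurs.

    `files` is a dict of path -> file contents; line numbers start at 1.
    """
    index = defaultdict(list)
    for path, text in files.items():
        for lineno, line in enumerate(text.split("\n"), start=1):
            for word in set(line.split()):   # one entry per word per line
                index[word].append((path, lineno))
    return index

def find(index, needle):
    """Look the word up directly instead of scanning every file."""
    return index.get(needle, [])

# Hypothetical two-file corpus.
files = {
    "a.txt": "the quick fox\njumps over",
    "b.txt": "the lazy dog",
}
index = build_index(files)
print(find(index, "the"))
print(find(index, "over"))
```

This version only matches whole words. Matching a needle that appears inside a longer word would need something more elaborate, such as the trigram or suffix array approaches mentioned in the comments below.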

The first link, https://gist.github.com/willf/1514740, gives you a short inverted index solution in Scala, but you need to generate it for each file and merge the indexes for the whole set of files. The second link, http://twitter.github.io/scala_school/concurrency.html, gives you a simplified solution that helps you understand the idea, and then deals with its race conditions (yes, they exist in functional code too!). Fortunately, the base code uses the Broker pattern to reduce race conditions. After proper indexing, you can replace searcher’s tryPath method with the following:

```scala
def tryPath(path: String, needle: String) : Iterable[SearchResult] = {
  return index.find(path, needle)
}
```

It’s too late tonight, but I’ll update this post later with the updated code for this level, and the next level.

4 comments On Stripe CTF 3 Web Challenges Writeup

update plx

Using your example for Level0, simply setting the Hash without nils will make it run faster.

t= Hash.new{0}

I agree with you that scala is the combination of all the worst features in languages. For me it is the ‘C++’ of the Java world. I wasted a lot of time on level 3. My machine was too slow for the overbloated sbt build process. I gave up on ctf3. I tried inverted index, trigram and suffix arrays, but the memory blows up or it was even too slow (under 100).

Good Luck !

rpu: Have you tried modifying bin/start-server? Change the -Xmx argument to something larger such as -Xmx400m
